Beyond Slop: The Deep Structures of Image Synthesis

A practice-led reflection on image technics in the age of the GPU.

In 2012, I made my first CGI artwork, entitled Z. Inspired by the Occupy movements of 2011 and commissioned by UK arts organisation Animate Projects, it was broadcast on late-night Channel 4 in the UK and reflected a deep fascination I’d developed with z-depth or depth map images: monochromatic renders that visualise distance along the Z-axis (near to far) within a virtual scene. As with all my films, it’s free to watch on my website. I’m almost certain that Z is the first artwork to focus on z-depth images and is probably one of the first films to explore the technical underbelly of computational photography1, though admittedly, there still aren’t that many. I’ve written about the film before and so have others - most notably media archaeologist Jacob Gaboury2 - but I’d like to sketch out the genesis of the piece and use it to connect the last twenty years of synthetic imaging developments to some of the cutting-edge advancements in generative AI production.

On the Animate Projects website, the original accompanying text for Z is preserved:

Is hindsight 20:20? Does history reveal or obscure the truth? Can we predict the future? Will history repeat itself? Are we in control?3

As you can tell, I had many questions, and not so many answers. I had a gut feeling that z-depth images were culturally significant, but it’s only now - thirteen years later with a Masters and a PhD under my belt - that I find it easier to make connections to the kind of critical theory that would have helped me position the work more effectively: Foucault’s episteme4, Bernard Stiegler’s tertiary memory5, Martin Jay’s scopic regimes6 or Benjamin’s angel of history7. All these writers address how our concepts of past, present and future are variously filtered through the lens of technology, power and ideology. I sensed this when making the film, but I wasn’t so invested in theory then - I’d rebounded from a fine arts degree with an antipathy towards deskilled arts practice, and Z remedied this, emerging from a hands-on engagement with new technology and a fascination with what lay beneath the rendered photorealistic image.

The film was completed at the end of a formative period working full time in a London animation studio, before which I’d completed an intensive course in 3D animation. On that course, we’d barely rendered anything - in fact, it was only in the last week that students were guided towards the render frame button and finally saw wireframe bones and shells transform, incrementally, into impossible photographs. But the thing about renders is that they never come out quite right, which is probably why the tutor held off on rendering so long: tweaking them becomes addictive quickly. And so, in 2010, when I was hired as a Junior 3D Generalist and began working in the bro-filled image factories of post-production and 3D animation, I began indulging this addiction. Slowly, I became attenuated to render logics and temporalities: the job involved constant testing, client feedback and a knowledge of the subtle differences in render engines, render farms, lighting algorithms and sampling settings. An increment of 0.001 in an obscure sub-sub-menu could mean the difference between a render failing or succeeding, so this a was high-stakes activity, the dark art of (quickly) conjuring light from numbers.

This work - sometimes termed ‘render wrangling’ - not only changes how you experience visual culture, but how you relate to time. Prior to the GPU revolutions of 20178, you had to wait minutes, hours, days, or weeks to see what your 3D scene looked like. Renders are a bit like boomerangs in this sense - they return with a delay that structures the focus and rhythms of a working day9. Indeed, this is why industrial post-production abides by international ‘follow-the-sun’ production schedules, where work never stops and why the individual artist often sets renders off overnight10 . And if you do this enough, your dreams start to become infiltrated by render anxiety. Will the render farm throw up an error? Were your sampling thresholds too low or high? Will your images have ‘acne,’ ‘jaggies’ or ‘fireflies’ when you get to your desk in the morning? Your body starts to sync with the metabolism of the pipeline, you start to obsess over sampling and lighting settings, 32bit EXRs or global illumination techniques.

This is how you gradually sink into a deeper understanding of rendering, and - in a nutshell – it explains the phenomenological experience that led to making Z, but it’s also a window into a kind of image technics (a term I outlined in a previous post) that is under-theorised, fascinating and crucial to a deeper understanding of the future trajectories of generative AI (which is, at the end of the day, just a new kind of rendering).

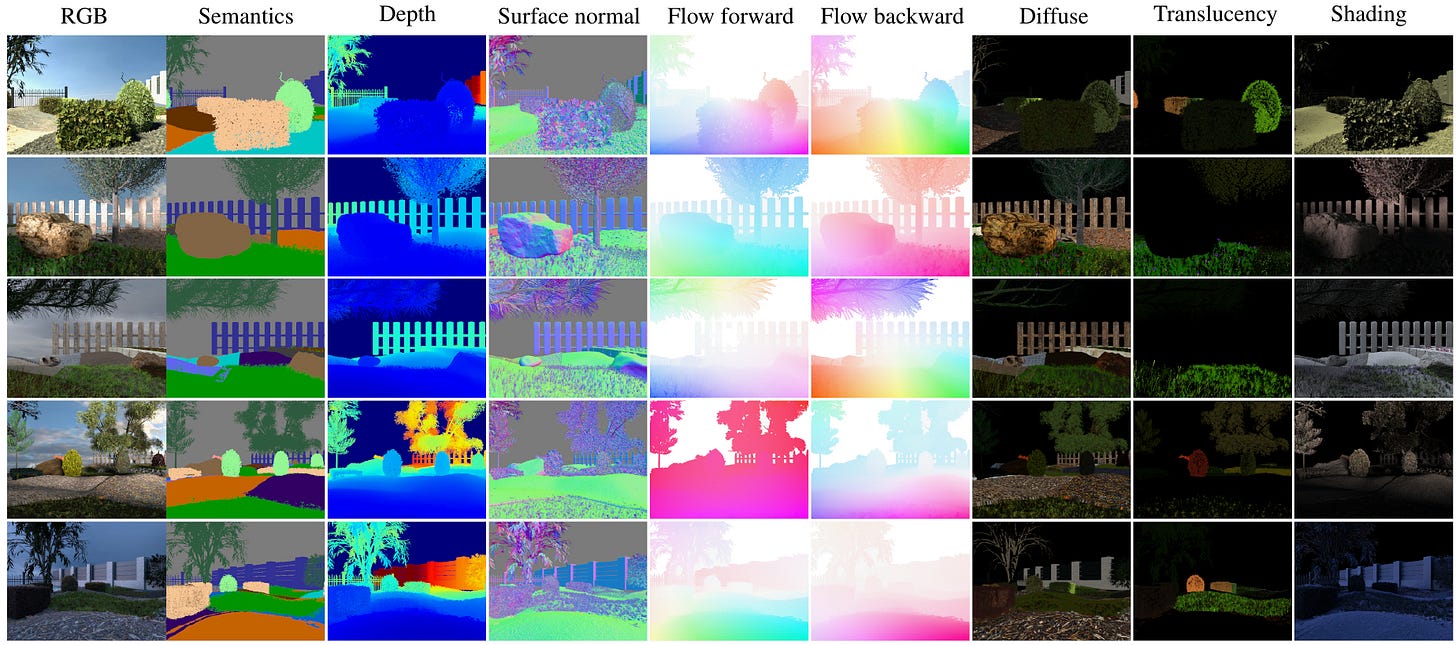

The z-depth image is one of several synthetic modalities that power the ControlNet models used in ComfyUI, that are routinely extracted from smartphone sensor data, stored or shared as ‘anonymised’ datasets or deployed quietly by predictive off-the-shelf AI imaging apps. Other visual registers include motion vectors, pose information, normal maps, mattes, segmentation maps and ‘canny’ outlines. They’re designed to store rich combinations of spatial, optical, mathematical, semantic and computational data as colour/transparency values and – if studied – can really help communicate how machines see. It is through registers like these that we can glimpse how all forms of image data are interpreted within machine-learning pipelines. This is how platform capital comes to know the world through images.

Despite this, the average scholar of media has little knowledge of these computational substrates, which, in CGI discourse, are known alternately as render elements, render passes, deferred shading, screenspace shaders or AOVs (arbitrary output variables). While they’re not as accessible as the ‘glitched’ images that so many visual theorists consider the sole symbol of digital materiality, they’re also not as inaccessible to human perception as the n-dimensional vectors of latent space. As such, render elements are a valuable middle ground between the human and the machine, a portal into thinking about synthetic images in productive and nuanced ways. What’s more, they help connect the strange ontologies of generative AI to a long history of image synthesis – histories that if read carefully, would counter the prevailing sense that latent diffusion models came from nowhere and landed at the centre of visual culture like hermetic alien spaceships.

As well as pointing forwards to generative AI, a closer attendance to the evolution of CG rendering technologies also helps connects us back to photography. Whereas the magic trick of photography is to channel the light of free-flowing life through an aperture and onto film or a CCD, there is no such light, life or alchemy in CGI11. Yet what many don’t realise is that we still have apertures. The job of the graphics processing unit (GPU) - and more specifically, the frame buffer - is to mitigate processing bottlenecks. It’s a bit like when you move into a new apartment and you have to take a sofa or wardrobe apart to get it up the stairs: the frame buffer’s purpose is to striate, serialise, cull, section and stratify an unwieldy non-phenomenological ‘blob’ of complex data into a production-friendly format. GPUs solve the central problem of CGI, which - as media-theory daddy Friedrich Kittler12 suggests - is not depicting complexity or making things look real. No, the problem is that it can imagine more than it can draw, and it draws slowly. The solution is to offload, distribute, decentralise and decompose data processing through computational architecture13.

If we take seriously this idea of the GPU as a data aperture, we must note that it’s not modelled after the physiological form of the eye (as cameras are) but rather operates more like a mouth or a gullet. This is why, for me, GPUs are like hungry ghosts14, more specifically needle mouths (針口鬼): insatiable entities cursed to forever nibble at a world they’d prefer to consume in one bite. Extending the metaphor, these hungry ghosts require their food cut into tiny pieces, and this is what the GPU multithreading and frame buffering does, by opening millions of tiny multithreaded apertures which can both consume and digest visual data in manageable bites.

The reason I’m pushing this metaphor is primarily because it’s hard for most digital scholars, artists, curators, journalists or writers to grasp how deeply GPU architectures have structured visual culture. GPUs were designed to solve rendering bottlenecks to in games, animation, and visual effects, but – surprise! – found their most potent deployment in supercharging the powerful transformer models from which generative AI emerged. And not only that – after decades of reciprocal adaptations from CG workers, the render elements that helped siphon rich data from the nonphenomenological ‘blob’ of the virtual 3D scene are now used within cutting-edge generative AI applications like ComfyUI and ControlNet to break apart the equally blobby ‘AI slop’ or ‘pixel soup’15 of the statistical GenAI image.

My point, if indeed I have one, is projects like Z exist in a limbo between one phase of imaging and another, and they tell a story of continuity rather than rupture, situating AI-assisted machine vision as a longstanding evolution of image synthesis that has complex historical, phenomenological, ontological and epistemic dimensions. When talking about AI and synthetic images, I find it useful to think in the space between the impenetrable paradigms of latent space and more old-school ideas of compositing or Photoshop layering. Hybrid images demand hybrid methods and attending to the deeper substrates that underpin the construction of synthetic images16 helps us access a novel ontology of posthuman visuality defined by the affordances of the GPU. Indeed, given that NVIDIA is currently the most profitable company on the planet, I might now answer some of the questions posed by Z more confidently: we are living in the age of the GPU, and neither the artist, media theorist nor cultural critic can afford to ignore the complex visual forms it fosters.

Don’t quote me.

Cardoso Llach, D. and Vardouli, T. (2023) Designing the Computational Image, Imagining Computational Design (Exhibition Catalogue). Applied Research and Design Publishing.

https://animateprojectsarchive.org/films/by_date/2012/z

Foucault, M (2006) The order of things: an archaeology of the human sciences. London: Routledge.

Stiegler, B. (1998) Technics and Time: The Fault of Epimetheus. Translated by Beardsworth, R. and Collins, G. Stanford, California: Stanford University Press.

Jay, M. (1988) 'Scopic Regimes of Modernity' In: Foster, H. (ed.) Vision and Visuality. Seattle: Bay Press. pp.3–23.

https://www.sfu.ca/~andrewf/CONCEPT2.html

Pascal and Volta were the big architectures in this period. They brought cinematic real-time rendering into reach for consumer PCs (4K gaming, VR), while also serving as workhorses for AI labs. Many early CNNs, GANs, and even AlphaGo were trained on Pascal GPUs.

As Adrian Mackenzie suggests, this kind of work entails “rhythms, produced by synchronizations and delays, [that] involve repetitions, cycles and feedback loops working against, with, through or independent of other repetitions, cycles, and beats.” Mackenzie, A. (2002, p84) in Transductions: bodies and machines at speed. London: Continuum.

See Marcus Verhagen’s discussion of my work Sprites I-IV in Verhagen, M. (2023) Viewing Velocities: Time in Contemporary Art. London: Verso Books.

Photography orphans light from its source, loses spatial information and flattens the phenomenological world into disparate slices of data. CGI can preserve all that data through render passes. It’s a metacamera.

“It is only in the name of impatience that all existing computer graphics are based on idealizations” Kittler, F. A. (2001) 'Computer Graphics: A Semi-Technical Introduction' Translated by Ogger, S. In: Grey Room (2) pp.30–45.

Gaboury, J. (2021) Image objects: an archaeology of computer graphics. Cambridge, Massachusetts: The MIT Press.

I like to think that my coinage of the term ‘pixel soup’ in 2023’s Wizard of AI had some bearing on the later (and better) AI slop, which organically emerged in early 2024.

There’s an untold history of depth maps, normal maps and deferred shading that begins in the mid-1980s and extends through to the early 2000s. Nobody has written it, and if anyone wants to fund a postdoc, let me know!